Background

Kubernetes is the cool new kid in the cloud infrastructure world. It is an open-source container orchestration system for automating software deployment, scaling, and management. Nowadays, when we manage our cloud infrastructure, we have to look after cloud resources such as storage, databases and message queues, and on top of that we also need to manage everything that runs inside Kubernetes.

In the Google Cloud (GCP) world, Terraform has become the de facto tool for managing infrastructure. Engineers use Terraform to deploy GKE, the managed Kubernetes service on GCP. From there, they take one of two routes:

- Use Terraform to manage GCP cloud resources, and kubectl plus YAML to manage Kubernetes resources

- Use Terraform to manage both GCP cloud resources and Kubernetes resources, the latter through the Terraform Kubernetes provider

YAML lovers may ask: is there a way to deploy GCP cloud resources with YAML as well?

Yes. Google recently released a Kubernetes add-on called Config Connector that makes this possible.

Introducing Config Connector

Config Connector is a Kubernetes add-on that lets you manage GCP resources through Kubernetes. It provides a collection of Kubernetes Custom Resource Definitions (CRDs) and controllers. The Config Connector CRDs let Kubernetes create and manage Google Cloud resources when you configure and apply objects to your cluster.

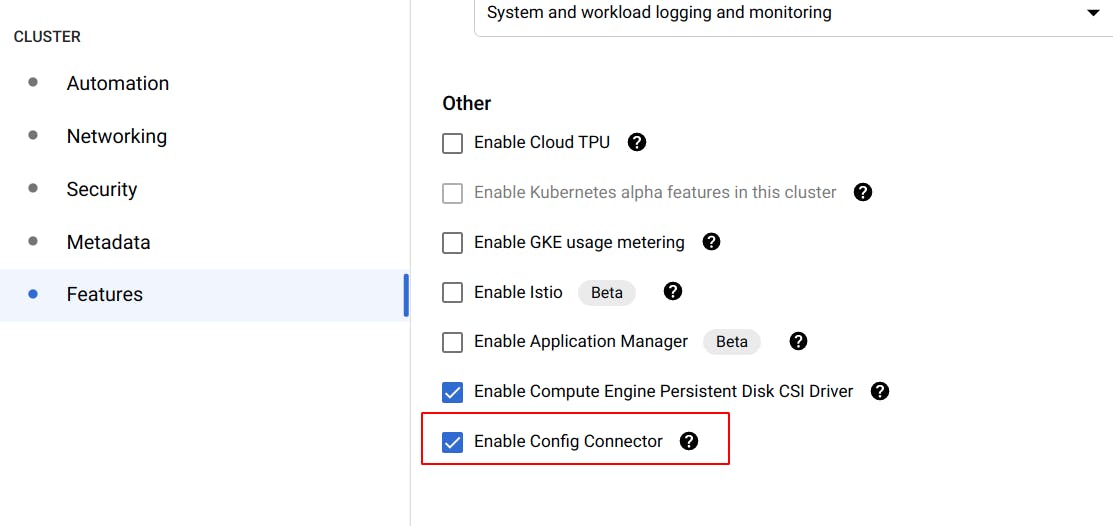

Enable Config Connector

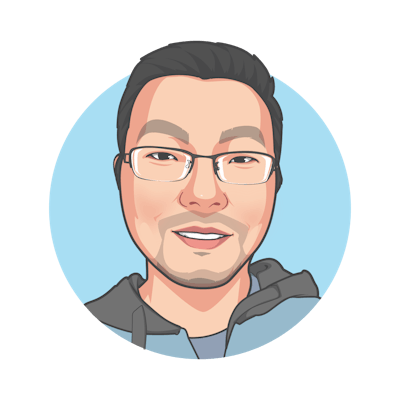

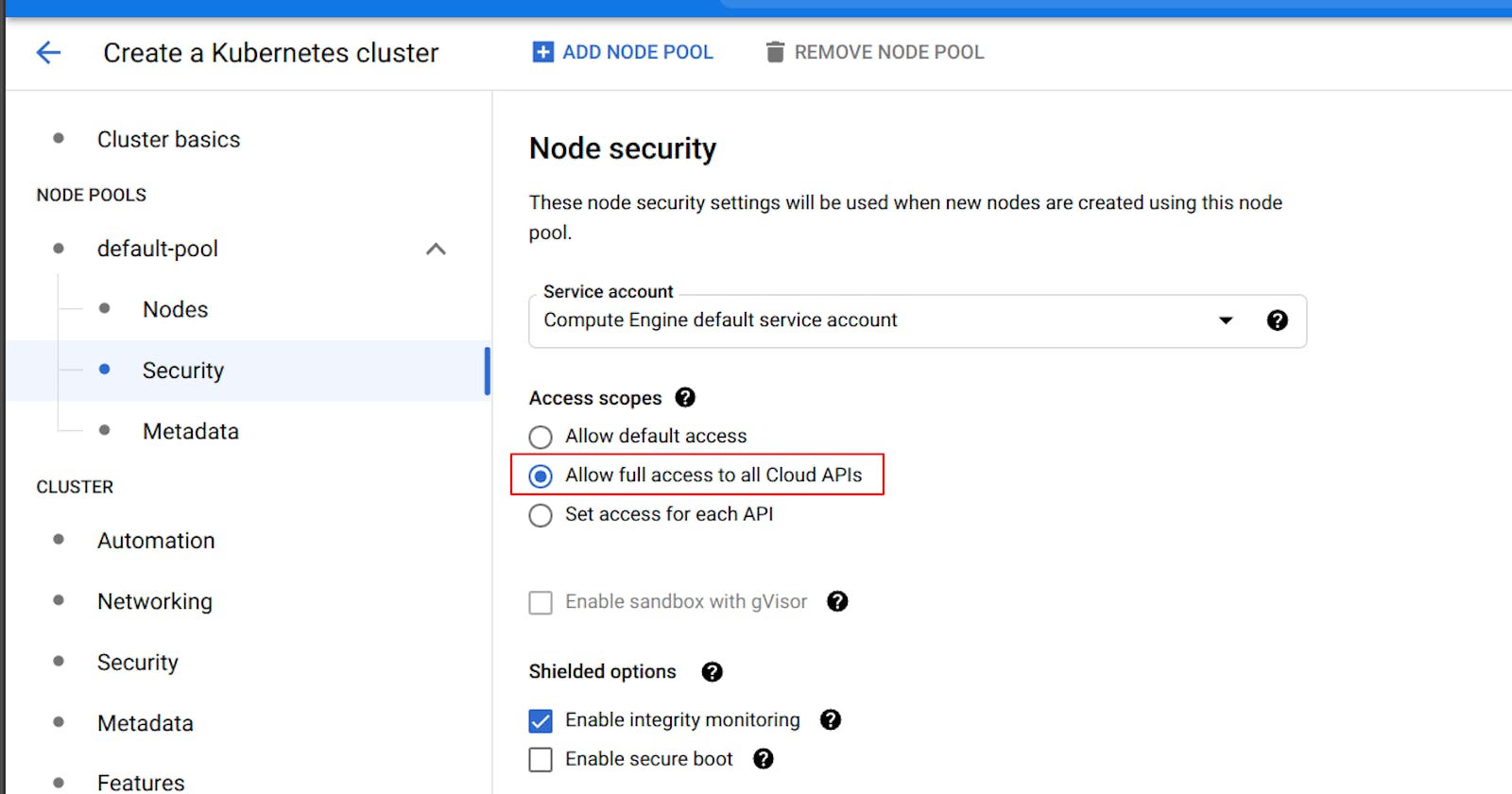

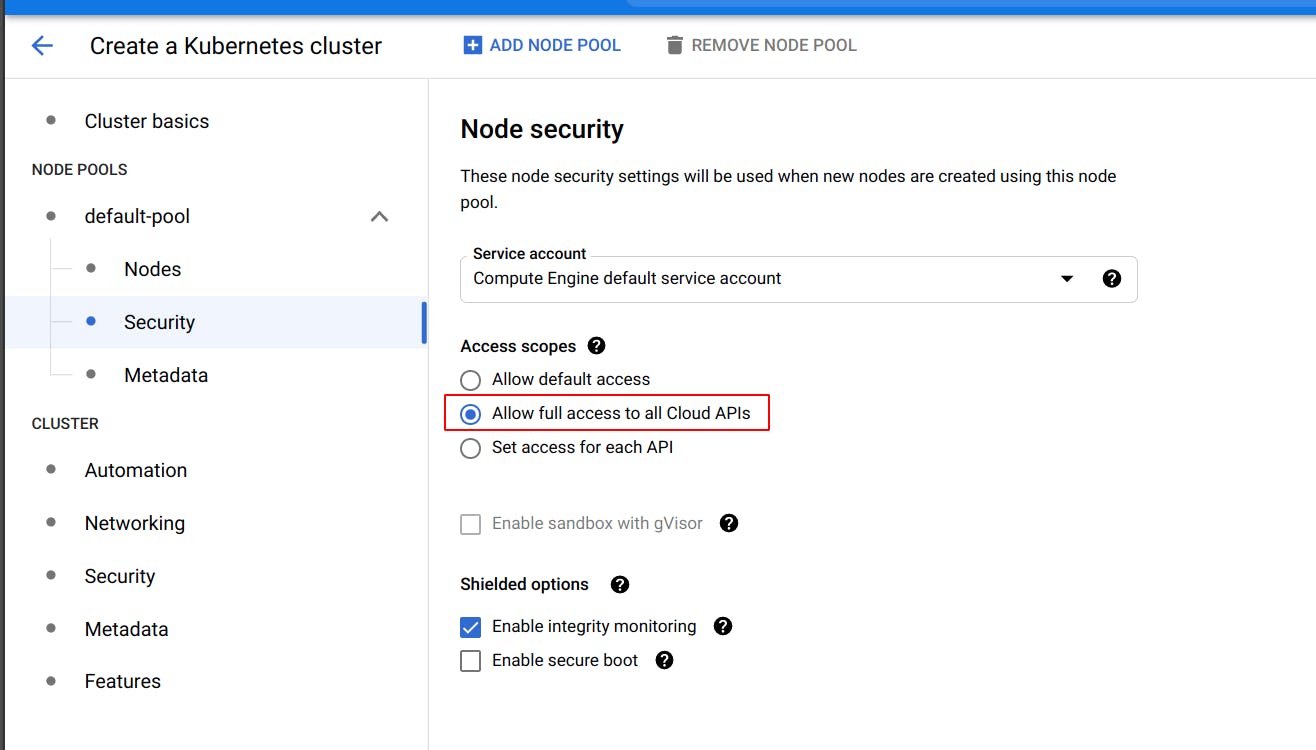

To enable Config Connector when creating a GKE cluster:

- Allow Cloud APIs from Access Scopes

- Enable Workload Identity to allow workloads in your GKE clusters to impersonate Identity and Access Management (IAM) service accounts to access Google Cloud services

- Enable Config Connector

If you already have a GKE cluster, follow this link to enable Config Connector on it.
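Enabling the add-on is not quite the end of it: Config Connector also needs to know which IAM service account to act as. Below is a minimal sketch of the `ConfigConnector` object in cluster mode, following Google's setup documentation. `SERVICE_ACCOUNT_NAME` and `PROJECT_ID` are placeholders, and that service account is assumed to already have IAM roles on the project plus a Workload Identity binding for the member `serviceAccount:PROJECT_ID.svc.id.goog[cnrm-system/cnrm-controller-manager]`.

```yaml
# configconnector.yaml: tells the Config Connector add-on which IAM
# service account to use. SERVICE_ACCOUNT_NAME and PROJECT_ID are placeholders.
apiVersion: core.cnrm.cloud.google.com/v1beta1
kind: ConfigConnector
metadata:
  # Only one ConfigConnector object is allowed per cluster, under this fixed name
  name: configconnector.core.cnrm.cloud.google.com
spec:
  # Cluster mode: one service account manages resources for the whole cluster
  mode: cluster
  googleServiceAccount: "SERVICE_ACCOUNT_NAME@PROJECT_ID.iam.gserviceaccount.com"
```

Apply it with `kubectl apply -f configconnector.yaml` before creating any GCP resources.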

Use Config Connector to manage BigQuery datasets

Create a BigQuery Dataset

Save the YAML below as `create-bq.yaml` and apply it with `kubectl apply -f create-bq.yaml`:

```yaml
apiVersion: bigquery.cnrm.cloud.google.com/v1beta1
kind: BigQueryDataset
metadata:
  annotations:
    cnrm.cloud.google.com/delete-contents-on-destroy: "false"
    cnrm.cloud.google.com/deletion-policy: abandon
    cnrm.cloud.google.com/project-id: airflow-talk
  name: test-bq-dataset
spec:
  # Dataset name as it appears in BigQuery
  resourceID: my_test_dataset
  location: US
  # 86400000 ms = 24 hours
  defaultTableExpirationMs: 86400000
```

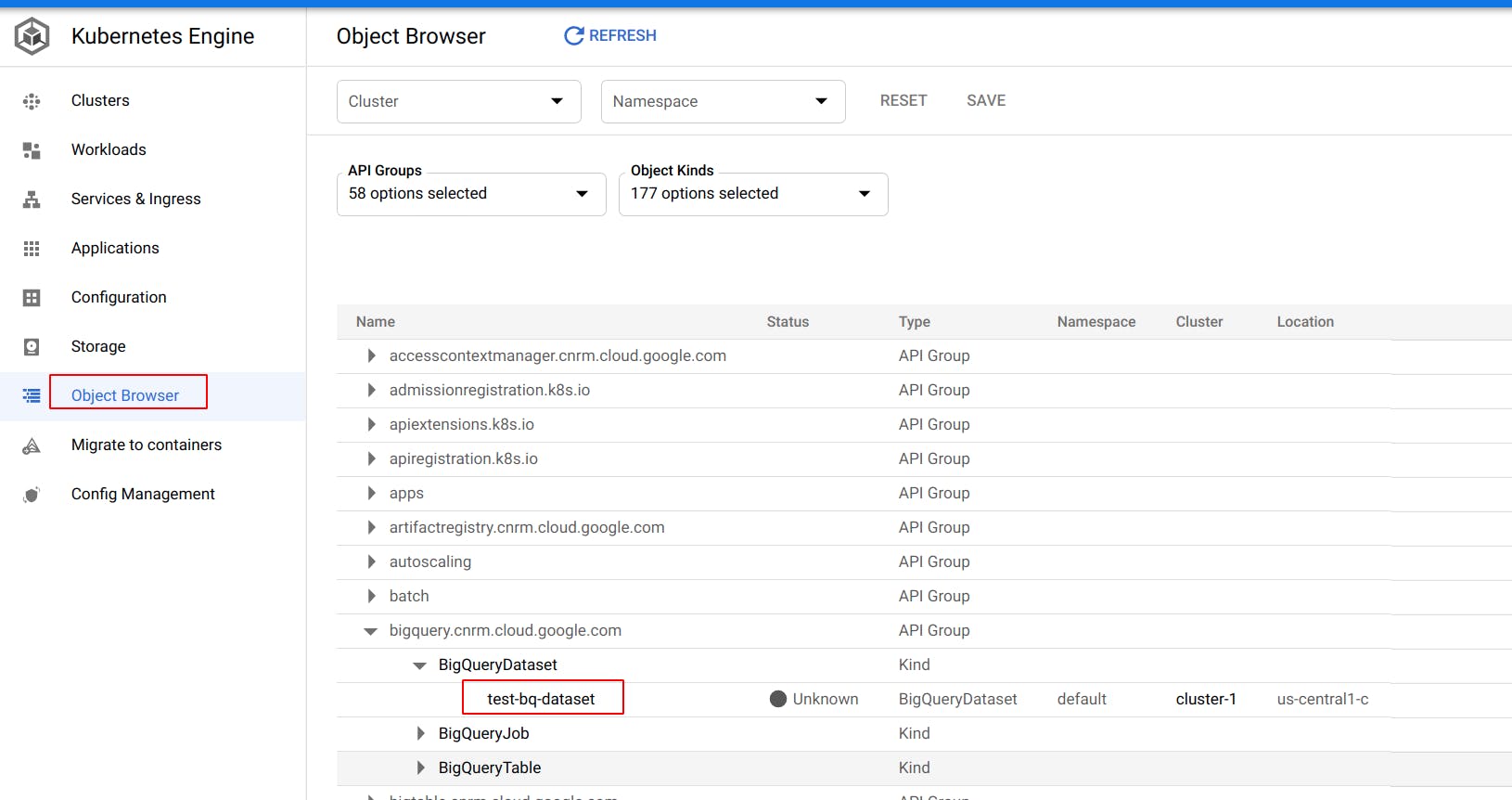
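The sample above sets the target project on the resource itself through the `cnrm.cloud.google.com/project-id` annotation. Config Connector also accepts that annotation on a namespace, so every resource created in the namespace defaults to that project. A minimal sketch, assuming a hypothetical namespace named `bq-demo`:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  # Hypothetical namespace name, used only for illustration
  name: bq-demo
  annotations:
    # Config Connector resources in this namespace default to this GCP project
    cnrm.cloud.google.com/project-id: airflow-talk
```

A BigQueryDataset created with `metadata.namespace: bq-demo` would then not need its own `project-id` annotation; an annotation set on the resource itself, as in the sample, takes precedence.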

Check the deployment

Run `kubectl describe bigquerydataset test-bq-dataset` to see the resource's status and events.

Or check it from the BigQuery UI:

Delete the Dataset

Run `kubectl delete -f create-bq.yaml`.

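To keep the Dataset in BigQuery after deleting the Kubernetes object, follow this guide.

In short, with `cnrm.cloud.google.com/deletion-policy: abandon` (as in the sample above), Config Connector leaves the Dataset in place when the Kubernetes object is deleted; without that annotation, Config Connector deletes the Dataset. The separate `cnrm.cloud.google.com/delete-contents-on-destroy` annotation only controls what happens to tables inside the Dataset: with "false", deleting a Dataset that still contains tables fails, while "true" deletes the tables along with it.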
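For the opposite behaviour, where deleting the Kubernetes object should also remove the Dataset and any tables in it, a sketch of the changed metadata block could look like this (the rest of `create-bq.yaml` stays the same):

```yaml
metadata:
  annotations:
    # Also delete any tables inside the Dataset when the Dataset is deleted
    cnrm.cloud.google.com/delete-contents-on-destroy: "true"
    # No deletion-policy annotation: Config Connector falls back to its default
    # and deletes the Dataset when the Kubernetes object is deleted
    cnrm.cloud.google.com/project-id: airflow-talk
  name: test-bq-dataset
```

Re-apply the file before running `kubectl delete`, because Config Connector acts on the annotations of the live object in the cluster, not on the local file.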

Key learning

Config Connector is a new tool for managing GCP resources the Kubernetes way. Whether it should be used instead of Terraform, however, is a topic for another day...