Photo by Rubaitul Azad on Unsplash

Cloud Composer Airflow and BigQuery External Table with Google Sheets

BigQuery has a useful feature which allows the user to create external table with data on Google Sheets. It is very convenient because BigQuery users can query the data from Google Sheets directly. However, as a data engineer, you may need to build pipelines to interact with the data from these external tables.

There are two required changes in your Cloud Composer Airflow to make it possible.

I'll show these changes in a lab.

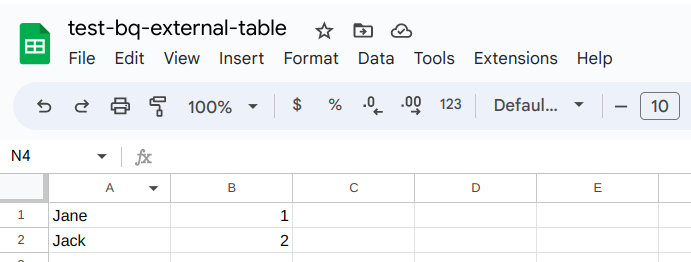

I created a Google Sheets file that looks like this:

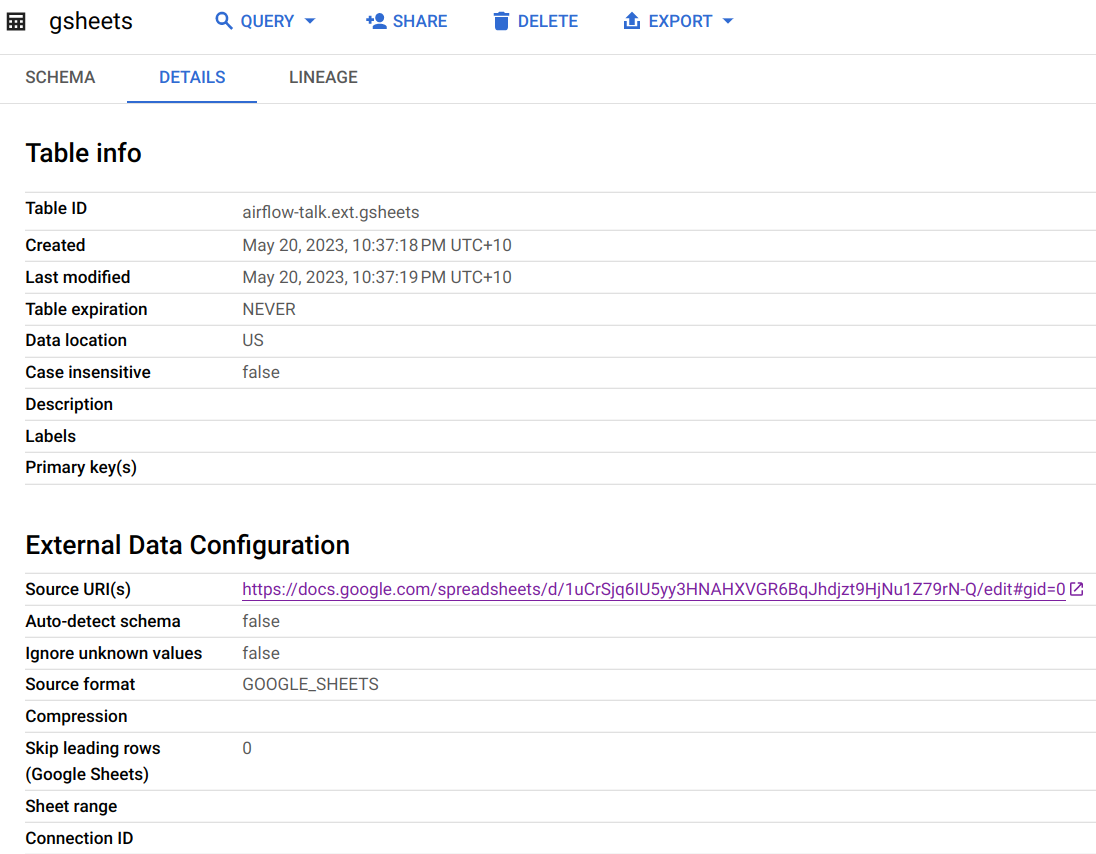

And created a BigQuery that links to this file:

I can query it without issue on BigQuery Console:

Now on the Airflow part, I created a DAG and uploaded it to Cloud Composer:

import datetime

from airflow import DAG

from airflow.operators.empty import EmptyOperator

from airflow.providers.google.cloud.operators.bigquery import BigQueryCheckOperator

with DAG(

dag_id="test-bigquery",

start_date=datetime.datetime(2023, 1, 1),

schedule=None,

):

DATASET = "ext"

TABLE_1 = "gsheets"

location = "US"

check_count = BigQueryCheckOperator(

task_id="check_count",

sql=f"SELECT COUNT(*) FROM {DATASET}.{TABLE_1}",

use_legacy_sql=False,

location=location,

)

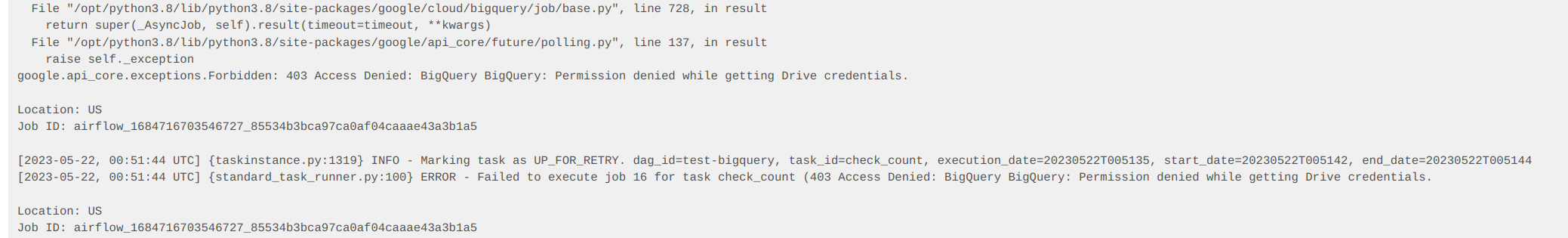

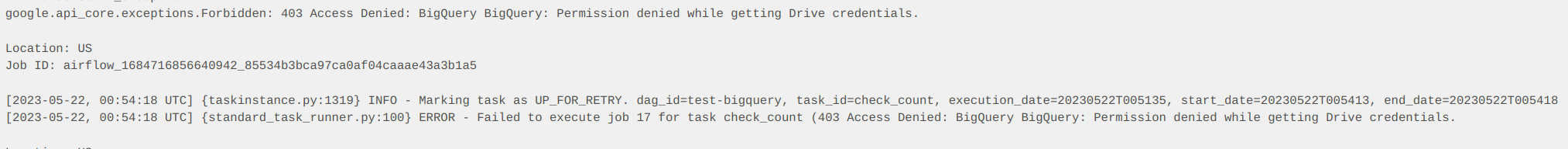

On the first run, Airflow reported an issue:

Diving into the logs, I found that it is about missing Google Drive access. It makes sense because Google Sheets data is stored on Google Drive.

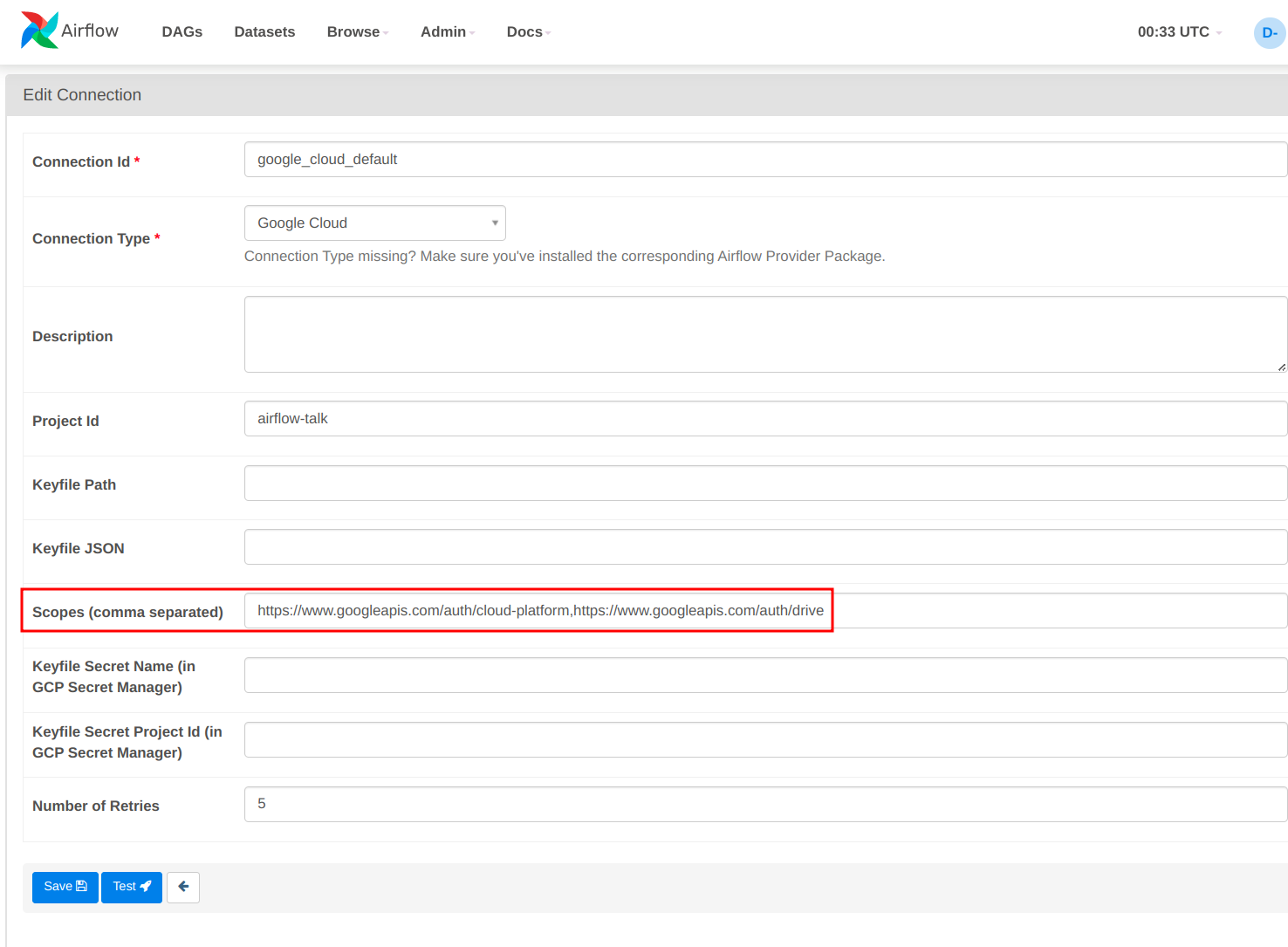

This error is understandable because, by default, Airflow uses google_cloud_default Connection, which doesn't have Google Drive API scope [1]. So I added it:

(Note that you'll need to add cloud-platform in the scope because you don't want to lose access to Google Cloud.)

I ran the DAG again but still getting issues:

What could be the missing puzzle?

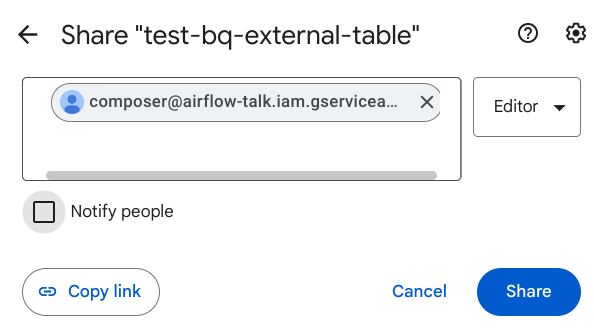

I realized that it could be the permission for the service account to access the Google Sheets file, so I shared the Google Sheets file to the service account from Google Sheets UI:

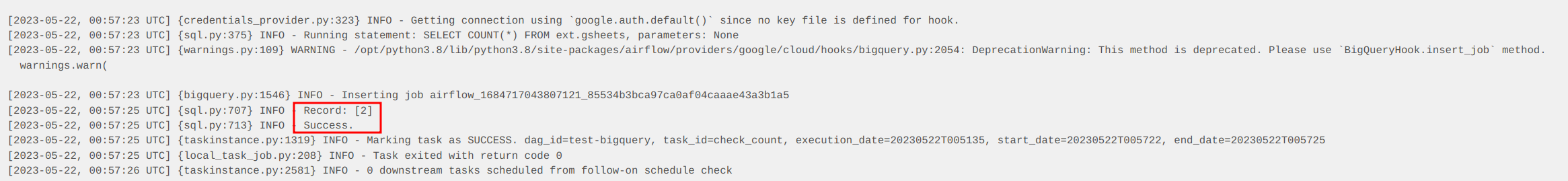

After that, I re-ran the job and it was successful!

Summary:

To access Google Sheets data in Cloud Composer via BigQuery, you will need the following:

Google Drive API scope to be added to your Airflow Connection

File access to be added to the service account that Composer uses